AI-Powered Prompt Enhancement: Automating Optimization at Scale

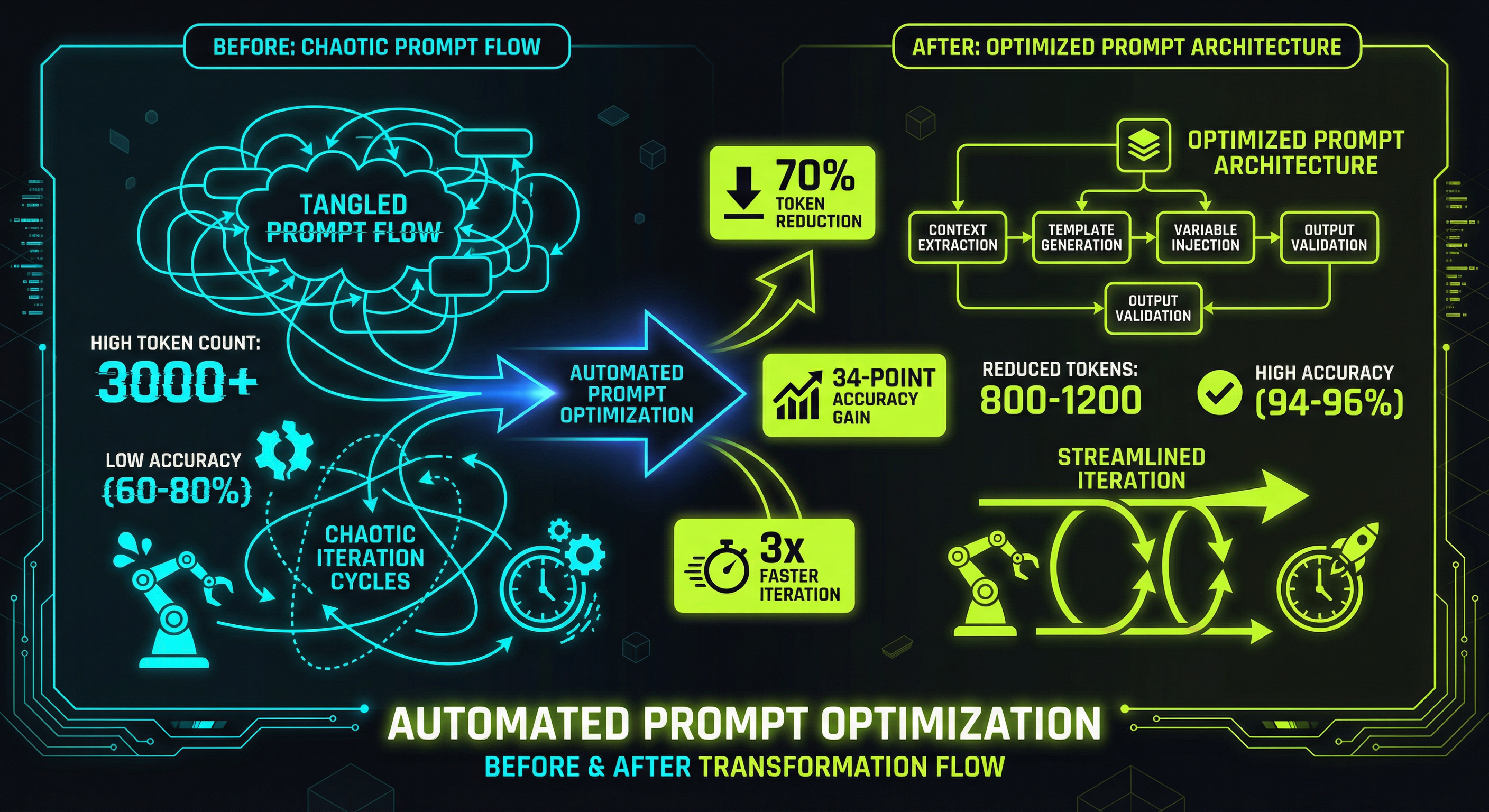

Stop manually tweaking prompts. Automated optimization delivers 70% cost reduction, 34-point accuracy improvements, and 3x faster iteration. The tools exist—DSPy, native LLM tools, and platforms like Promptsy make it accessible.

Key Takeaways

- Manual prompt optimization doesn't scale—60-80% of dev time wasted on iteration

- Real companies winning big: 70% token reduction, 34-point accuracy gains, 3x faster iteration

- Tools are production-ready: DSPy/MIPRO, native LLM tools, Promptsy Optimize/Expand/Tighten

Automated Prompt Optimization: The Shift from Craft to Engineering

Here's the thing about prompt engineering at scale: it breaks.

Not dramatically. Not all at once. But somewhere around your 50th prompt, your 100th deployment, your third team trying to ship features—manual optimization stops working. You're spending 60-80% of development time tweaking prompts. Token costs are climbing. Quality is... variable. And when something breaks in production at 2am, nobody can tell you why that prompt worked in the first place.

I've seen this pattern dozens of times. The solution isn't hiring more prompt engineers. It's building systems that optimize systematically.

The Problem: Manual Doesn't Scale

Let me be blunt. Manual prompt engineering creates three production risks:

No reproducibility. One engineer tweaks a prompt, ships it, moves on. Six months later, someone needs to change it. There's no documentation of what was tried, what failed, what the tradeoffs were. You're starting from scratch.

No measurement. How do you know your "improved" prompt is actually better? In most shops, the answer is vibes. Maybe some spot-checking. That's not engineering—that's hope.

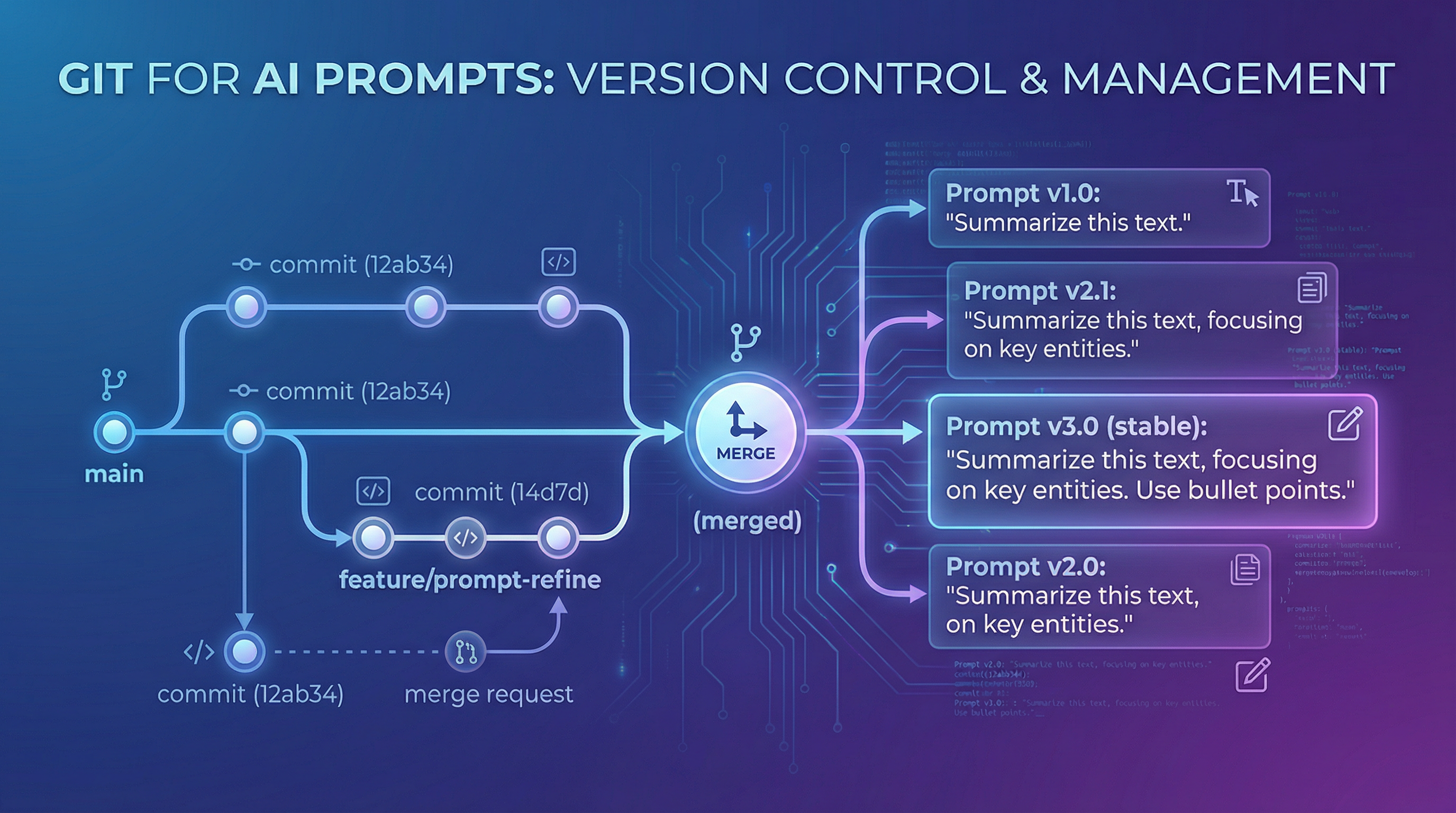

No rollback plan. What happens when your optimized prompt degrades in production? Can you revert in 60 seconds? Do you even know it degraded?

The data backs this up. Teams spend 60-80% of development time on prompt iteration. Meanwhile, 84% of developers are using AI tools, but almost none have a structured methodology for improvement.

BrainBox AI was burning 4,000 tokens per request across 30,000 buildings. Show me the cost-per-task on that. Crypto.com's AI assistant started at 60% accuracy with no clear path forward. Gorgias couldn't scale their prompt team fast enough—they'd created a bottleneck where ML engineers touched every change.

The pattern is clear: manual optimization is a team-of-one problem disguised as a scaling problem.

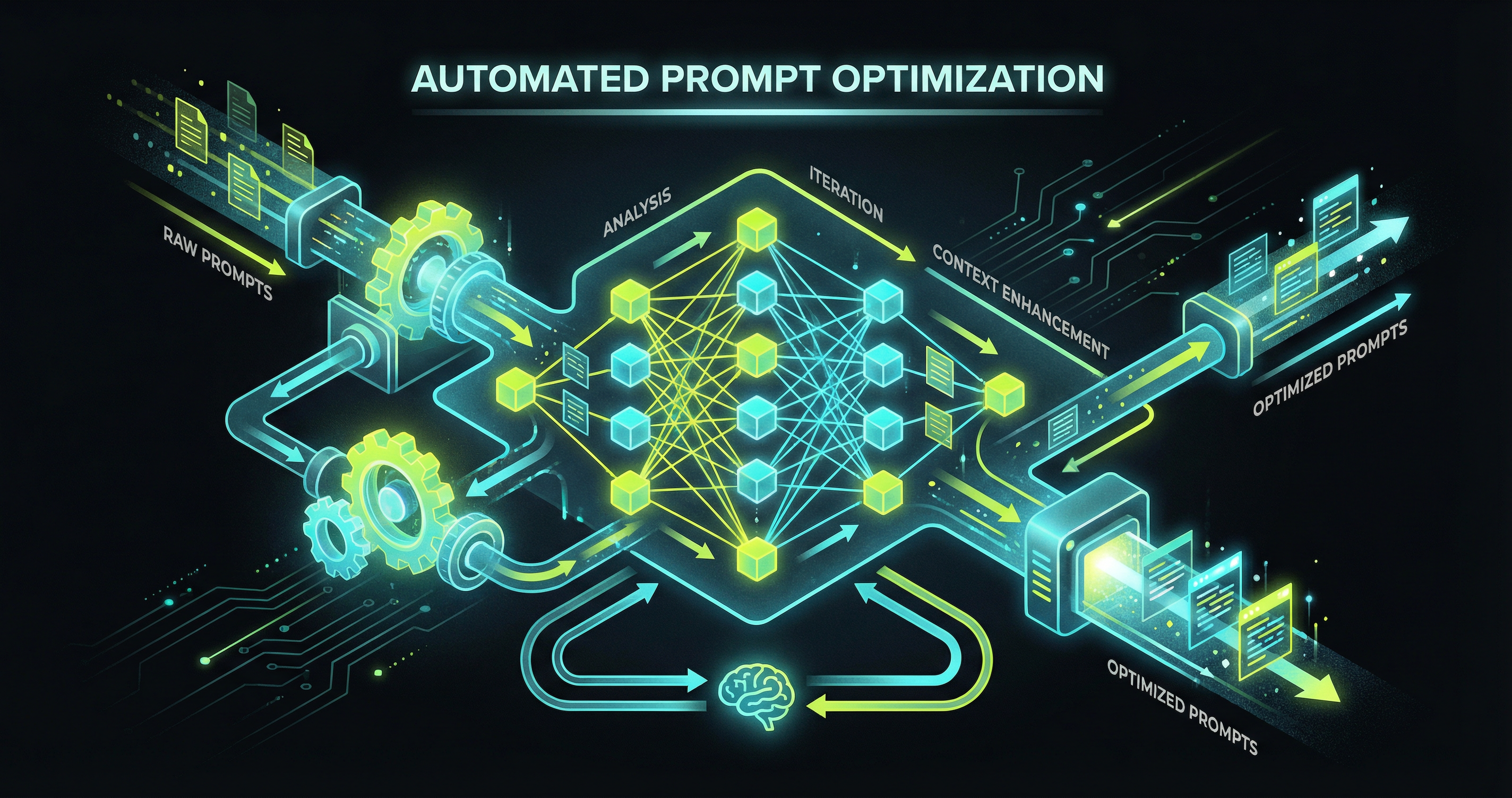

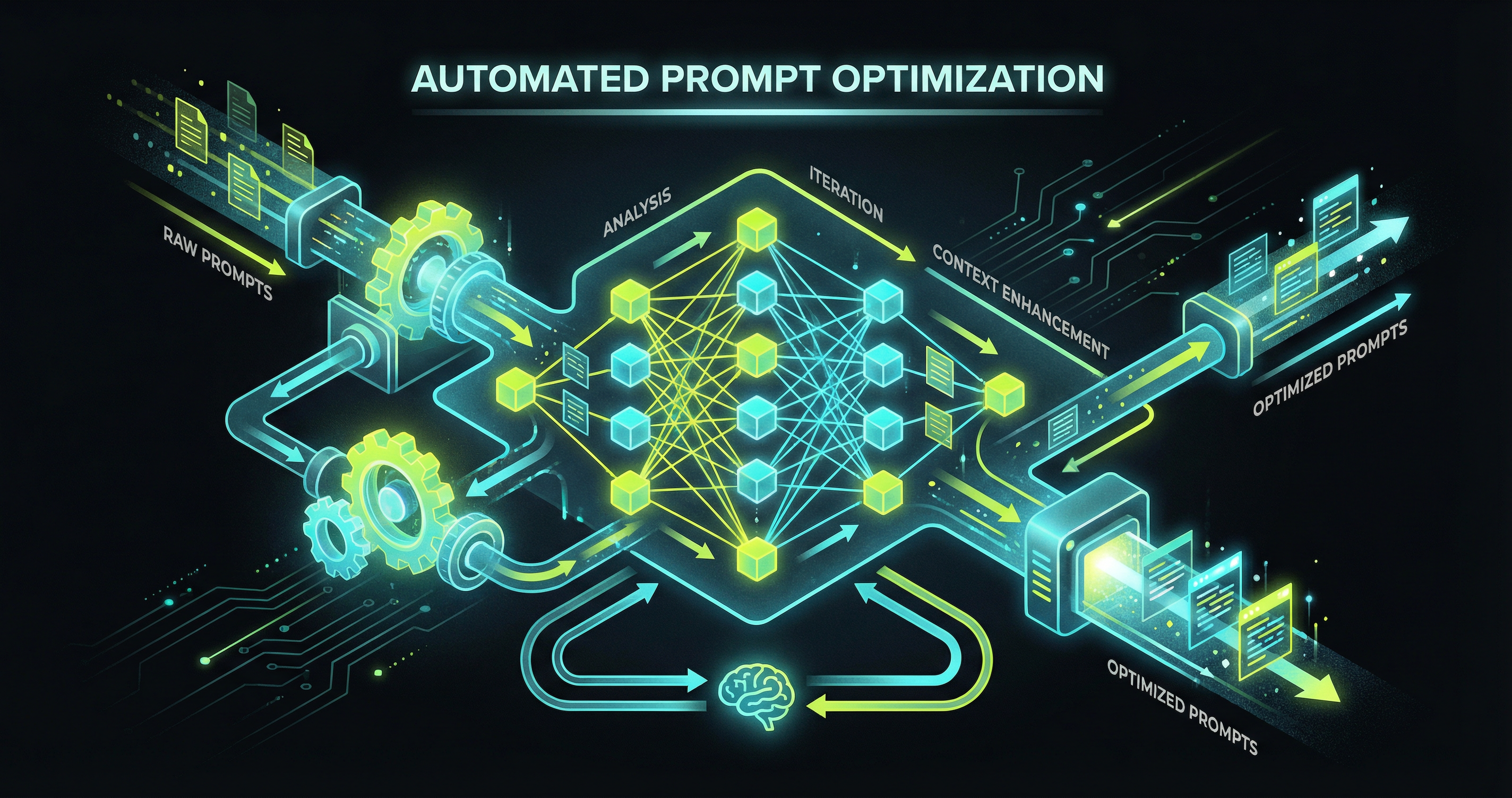

What Automated Prompt Optimization Actually Does

Automated prompt optimization (APO) is just systematic engineering applied to prompts. You define success metrics. You run experiments. You measure outcomes. You document what works.

Think of it like the difference between debugging by intuition versus debugging with instrumentation. You could guess why your system is slow. Or you could look at the traces and know.

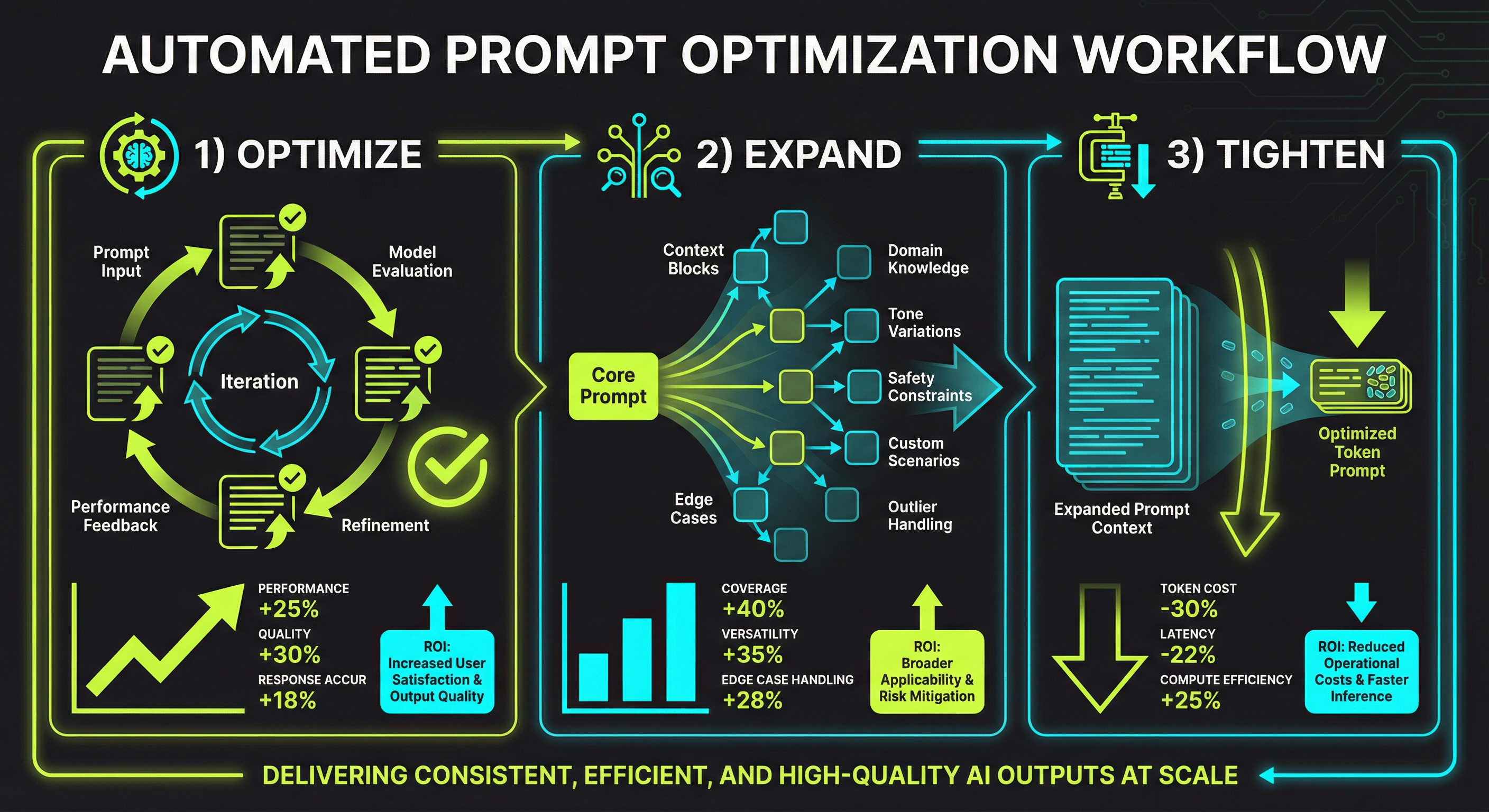

APO typically works in three stages:

Optimize: Identify underperforming prompts and refine them systematically. Crypto.com did this manually—10 deliberate iterations, each targeting specific failure modes, improving from 60% to 94% accuracy. APO automates that iteration loop.

Expand: Add context and edge case coverage when prompts drift. This happens constantly—data distributions shift, requirements change, and suddenly your carefully tuned prompt fails on cases it never saw. Expansion addresses drift before it becomes a production incident.

Tighten: Reduce token consumption while maintaining quality. The Minimum Viable Tokens approach. Caylent took a 3,000-token prompt down to 890 while improving accuracy from 82% to 96%. That's not a tradeoff—that's finding the actual signal in the noise.

The key: these stages are measurable, repeatable, and documentable. You can run them in CI. You can A/B test changes. You can roll back when something breaks.

Real Numbers, Not Theory

I don't trust frameworks without production data. Here's what actually shipped:

Caylent + BrainBox AI: 4,000 tokens → 1,200 tokens. 70% reduction. Response times dropped. Costs dropped. Quality improved. Can we roll this back? They could—because they built the system to support it.

Crypto.com: 60% accuracy → 94% accuracy. A 34-point improvement through systematic iteration, not model changes. They documented every round, built institutional knowledge, created a repeatable process.

Gorgias: Eliminated ML bottlenecks entirely. Product managers and prompt engineers iterate independently. Teams move 3x faster. Debugging time dropped 50%.

Meta-prompting adopters: 65% reduction in prompt development time. 120% improvement in output consistency. 340% improvement in AI initiative ROI.

These aren't cherrypicked. They're the floor for companies treating this seriously.

Three Production Patterns That Work

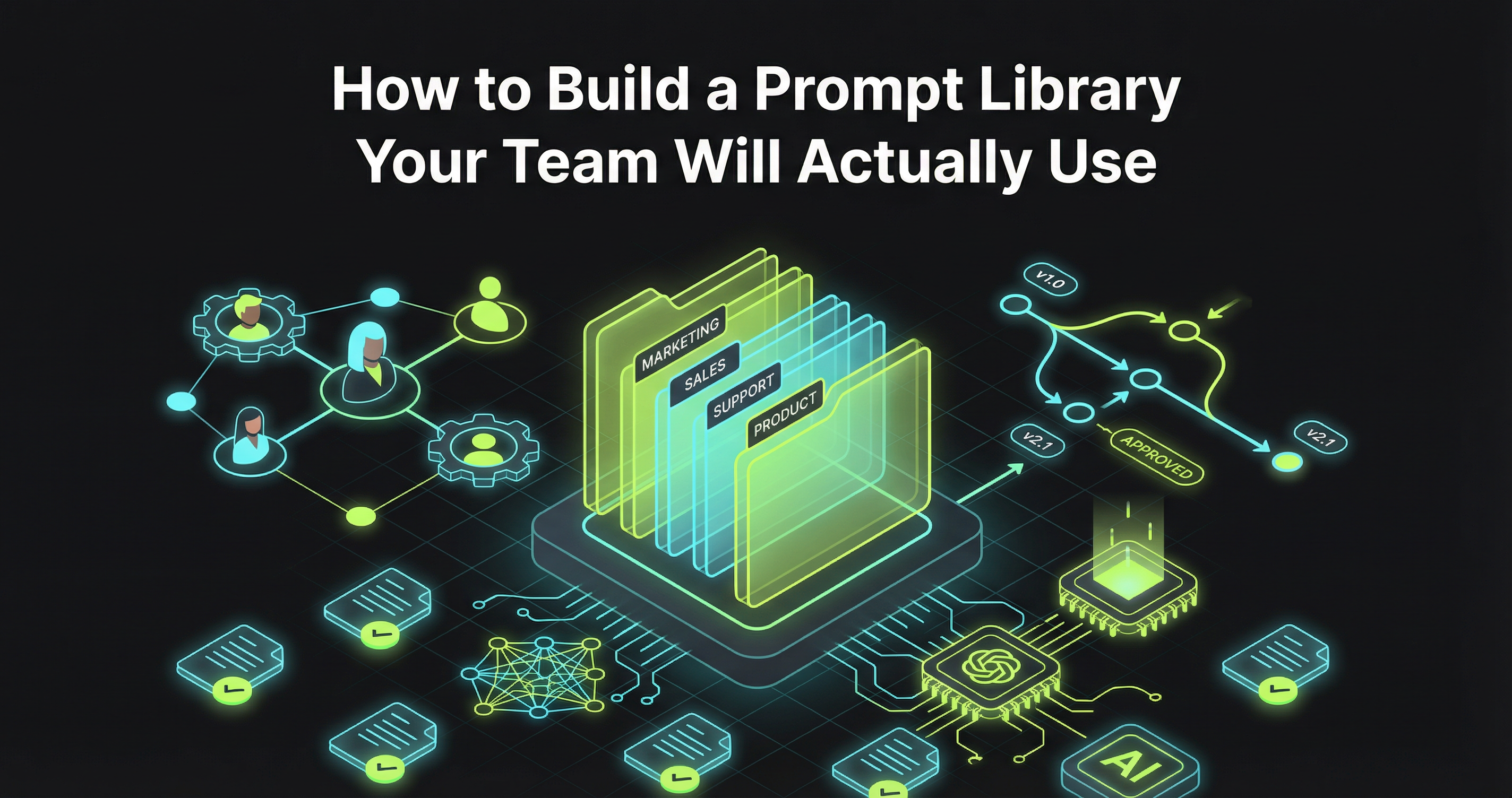

Pattern 1: Gorgias' Autonomous Model

Gorgias runs millions of support interactions. Their insight: the bottleneck wasn't prompt quality, it was who could touch prompts.

They deployed a central management platform, split their orchestration into modular agents (summarization, filtering, QA—each testable independently), and built automated evaluation pipelines. Now non-ML teams iterate autonomously. Zero blocking dependencies.

The win: scaling prompt engineering without scaling the senior ML team proportionally.

Pattern 2: Caylent's Cost-Quality Flywheel

Most teams treat cost and quality as opposing forces. Caylent proved they're not.

Systematic refinement. Strategic caching (90% cost reduction for repeated sections). Batch inference (50% immediate savings). The result: lower costs and higher accuracy. Simultaneously.

One prompt: 3,000 tokens → 890 tokens. Accuracy: 82% → 96%. That's not compromise—that's engineering.

Pattern 3: Crypto.com's Iterative Refinement

10 rounds of systematic improvement. Each round targeted specific failure cases they'd instrumented. They didn't guess what was wrong—they measured.

The result: a sustainable improvement loop that compounds. Document what worked. Feed it into the next iteration. Build institutional knowledge.

The win: compounding returns instead of one-off fixes.

The Tooling Landscape

You don't need to build this from scratch. Options exist:

DSPy/MIPRO: Stanford's framework uses Bayesian optimization to search prompt space automatically. Improved evaluation accuracy from 46.2% to 64.0% without manual intervention. You define success; it finds the path.

Native provider tools: OpenAI's Prompt Optimizer (April 2025), Anthropic's generation tools. Feed them graded outputs; they suggest refinements.

Purpose-built platforms: Promptsy's Optimize/Expand/Tighten workflow. PromptLayer for team-scale management. These handle the plumbing so you focus on evaluation criteria.

Meta-prompting: Using LLMs to optimize other LLMs' prompts. Early-stage but promising for exploration.

The common requirement: you need success metrics and evaluation data. The system handles the rest.

Implementation Path

Week 1: Instrument. Pick your highest-cost or lowest-performing prompts. Measure baseline: token count, accuracy, latency. You can't optimize what you can't measure.

Week 2: Build evaluation data. Collect 20-50 examples of success and failure. Real production cases if possible. This is your test suite.

Weeks 3-4: Run optimization cycles. Pick a tool. Run one cycle. Measure delta. Document changes. Ship what works. Repeat.

Month 2+: Scale the pattern. Document what you learned. Enable other teams to run the same process. This is where Gorgias made their leap—from individual wins to institutional capability.

One thing I always include: a rollback plan. Every optimization change should be revertible in under 60 seconds. If you can't roll back, you're not engineering—you're gambling.

Why Now

The market signal is clear. Prompt engineering market: $380 billion in 2024, projected $6.5 trillion by 2034. Prompt engineer demand: up 135.8% in 2025.

But here's the thing: companies aren't struggling because they lack prompt engineers. They're struggling because manual optimization doesn't compound. Hiring more people to do the same inefficient process just multiplies the inefficiency.

The providers have noticed. OpenAI, Anthropic, Google—all releasing native optimization tools. The message: we expect you to optimize your prompts, not just write them.

Manual craft got us here. Systematic engineering gets us to scale.

FAQ

How long does this take? Single prompt: 1-2 weeks with good evaluation data. Portfolio of 50-100 prompts: 2-3 months to establish patterns and train the team.

Do I need labeled data? You need examples of correct vs incorrect outputs. Not massive datasets—20-50 quality examples reveal most improvement opportunities.

What about provider lock-in? Good tools support multiple providers. Cross-model optimization is an emerging specialty—important as models improve and you need flexibility to switch.

What about interpretability? Real concern. Optimized prompts can be less readable. This is why documentation matters: capture why each change was made so future engineers understand the reasoning.

Key Takeaways:

- Manual prompt optimization doesn't scale—it creates measurement gaps, reproducibility problems, and operational risk

- Real production deployments show 70% token reduction, 34-point accuracy gains, and 3x faster iteration with systematic approaches

- The tools exist: DSPy/MIPRO for advanced optimization, native LLM tools for quick wins, platforms like Promptsy for team-scale workflows

If you're still manually tweaking prompts, you're leaving cost savings and accuracy gains on the table. The companies setting the standard in 2025 stopped guessing years ago.

Samira El-Masri is Lead AI Engineer at Promptsy, where she builds the production ML systems that power the platform. She writes about AI infrastructure, retrieval systems, and making AI that survives production.

Stay ahead with AI insights

Get weekly tips on prompt engineering, AI productivity, and Promptsy updates delivered to your inbox.

No spam. Unsubscribe anytime.

Related Articles

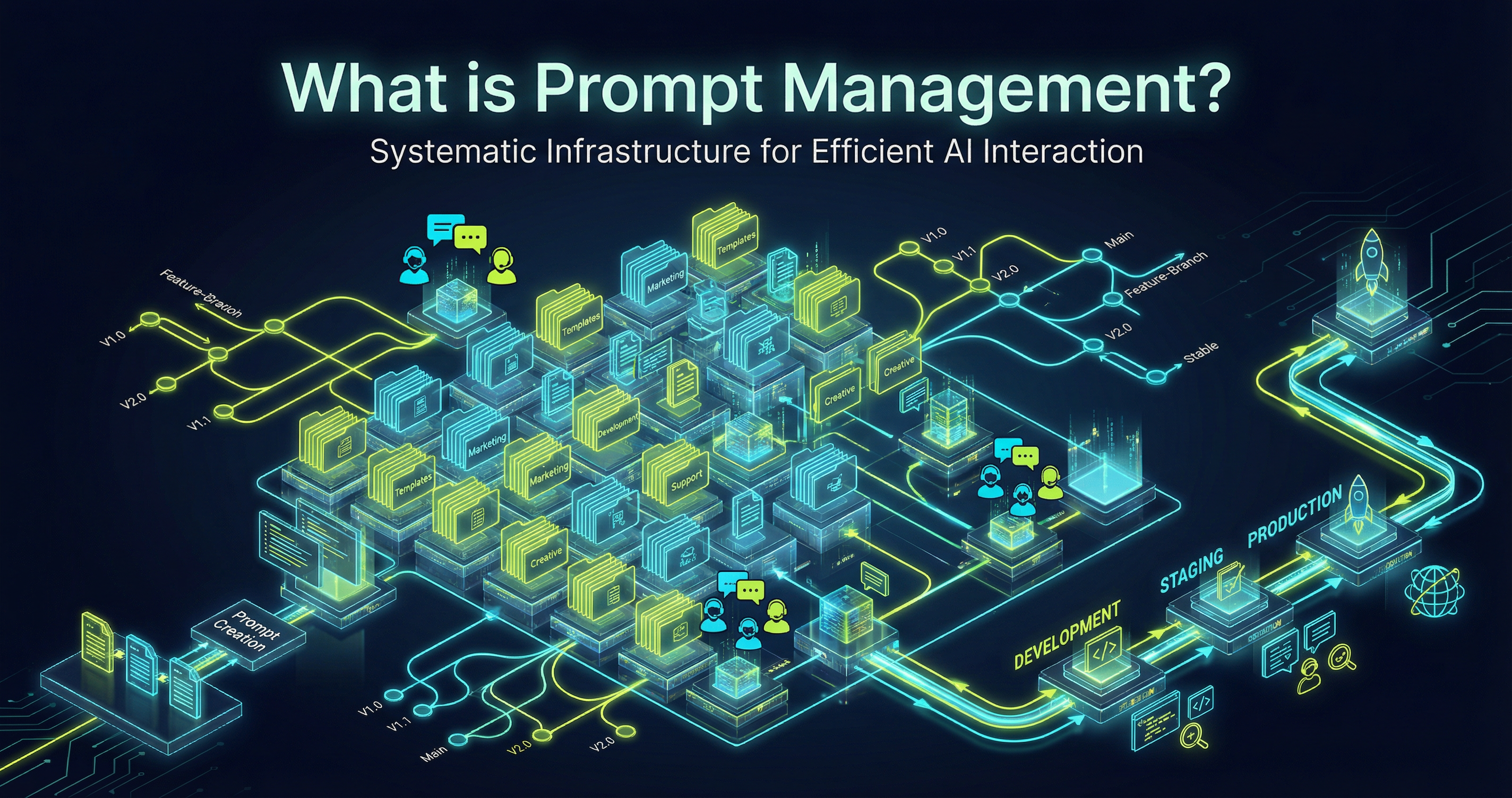

Prompt Version Control: Git for Your AI Prompts