What is Prompt Management? A Complete Introduction

Prompt management applies software engineering discipline to AI prompts through version control, organization, testing, and deployment infrastructure. Teams running AI in production need it to avoid lost knowledge, debugging nightmares, and compliance gaps.

Key Takeaways

- Prompt management is systematic infrastructure for the full prompt lifecycle, not just saving prompts

- 73% of AI teams cite prompt versioning as their top pain point

- Core components include version control, organization, access controls, testing, deployment, and analytics

- Teams report 50% less debugging time and 40-60% faster iteration cycles with proper prompt management

- Adopt when shipping AI to production, not after chaos sets in—start with production-critical prompts first


Seventy-three percent of AI teams report prompt versioning as their top pain point. Yet most still treat prompts like throwaway experiments: quick tests in a playground, copied into Slack threads, or buried in scattered notebooks. What started as casual experimentation has quietly become production infrastructure. Your prompts aren't disposable text anymore. They're code. And like all code running in production, they need engineering discipline.

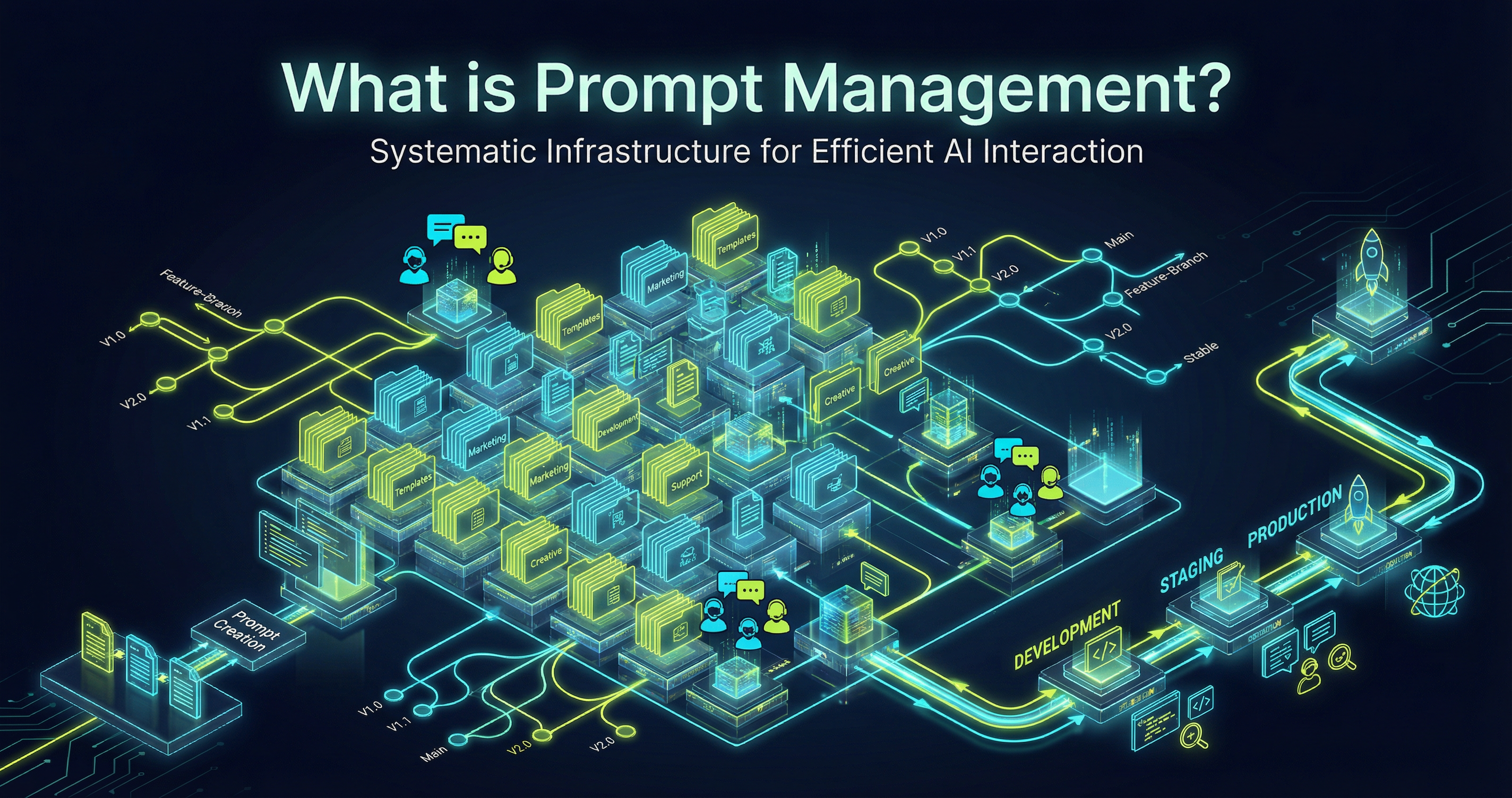

What is Prompt Management?

Prompt management is the systematic approach to creating, storing, versioning, deploying, and governing AI prompts across your organization. Think of it as the full lifecycle infrastructure for prompts—from that first draft in your IDE to the version serving millions of customer requests.

This isn't just saving prompts in a folder somewhere. Real prompt management covers:

- Version control with full diff tracking between iterations

- Organizational structures that let teams actually find what they need

- Access controls defining who can edit, deploy, or view sensitive prompts

- Testing frameworks that catch regressions before customers do

- Deployment mechanisms for promoting changes safely

- Analytics tracking usage, costs, and quality metrics

The distinction matters: prompt engineering is technique. Prompt management is infrastructure.

Prompt engineering teaches you how to write effective prompts—what examples to include, how to structure instructions, which parameters work best. Prompt management answers a different question: how do you run those prompts in production at scale?

This category emerged because LLMs moved from research experiments to business-critical systems. Five years ago, prompts were academic curiosities. Now they power customer support, generate marketing content, analyze legal documents, and write code. Production systems demand production infrastructure. Prompts are code and deserve the same rigor.

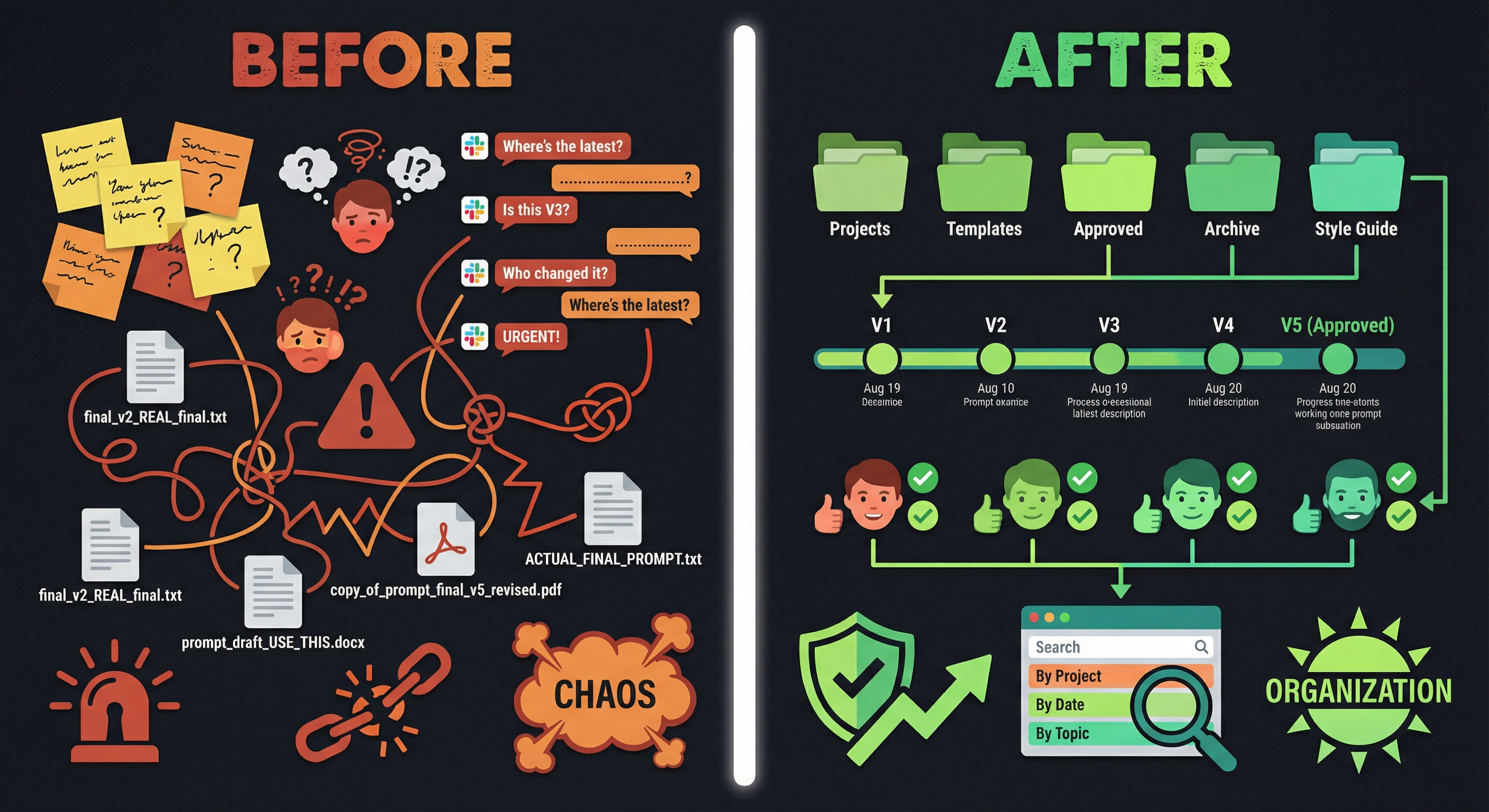

The Problem: Prompt Chaos at Scale

Here's what actually happens without systematic management.

An engineer spends two weeks perfecting a customer support prompt. It handles edge cases beautifully, maintains the right tone, and reduces response time by 40%. Then she leaves the company. That knowledge disappears. Six months later, someone else starts from scratch solving the same problem.

Or this: your production AI starts giving weird responses. Something changed. But what? You have v1, v2, and v3 in different files. Someone made a "quick fix" last Tuesday. Was it deployed? Who approved it? You're debugging blind without knowing what actually changed.

Meanwhile, engineering builds a summarization prompt for technical docs. Marketing builds a nearly identical one for blog posts. Product builds another for user research. Nobody knows the others exist. Each team burns a week solving identical problems independently.

The costs compound:

- Lost institutional knowledge: Engineers perfect prompts that vanish when they move on

- Version control nightmares: Debugging production issues without change history

- Team silos: Multiple teams solving identical problems in parallel

- Token waste: Inefficient prompts burning 30-50% more tokens than necessary

- Compliance gaps: No audit trails when regulators ask "how do you govern AI behavior?"

Teams report spending 5-10x more time managing prompts than improving them. That's the real cost—not the chaos itself, but the opportunity cost of smart engineers fighting infrastructure instead of building features.

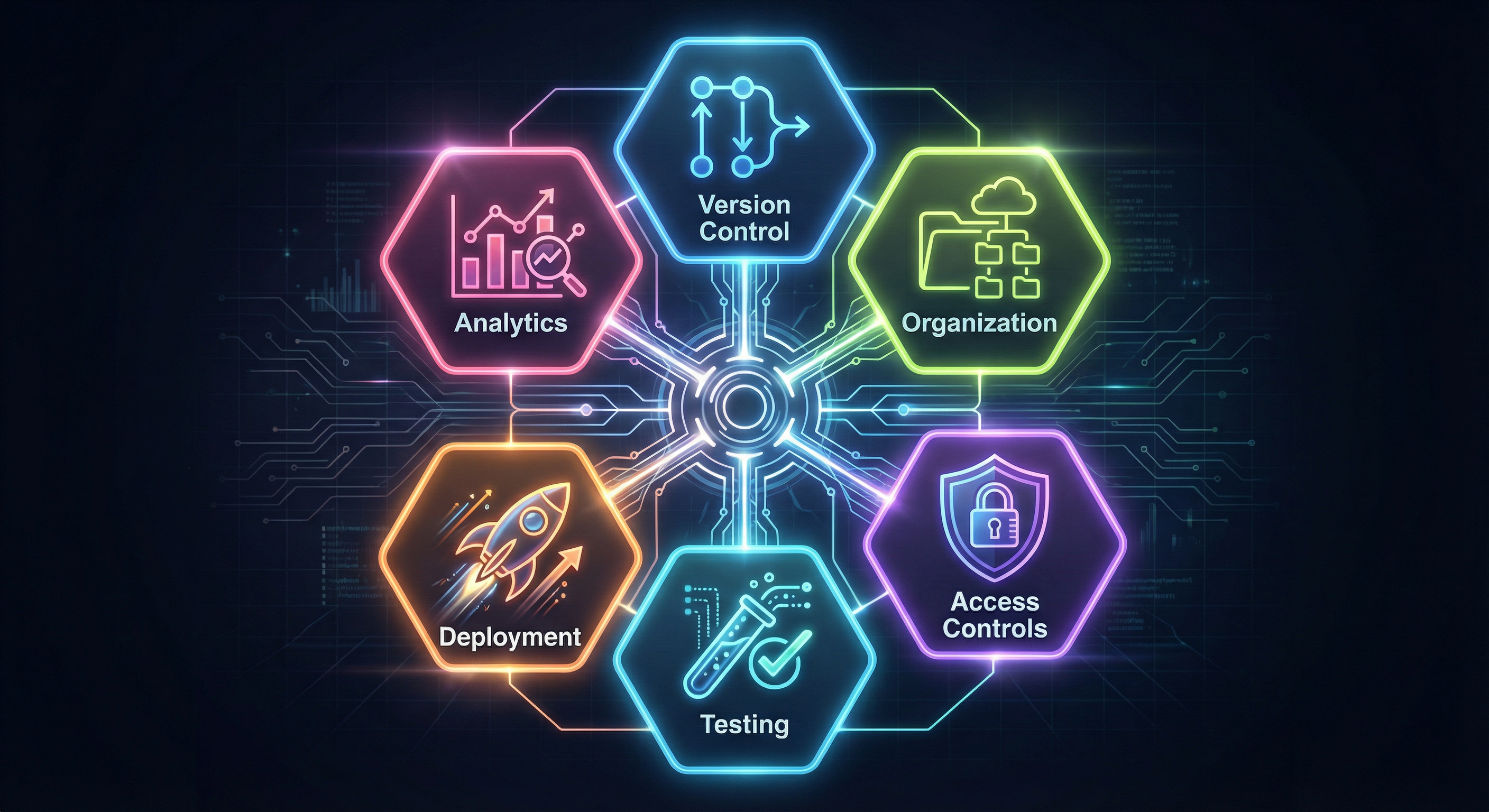

Core Components of Prompt Management

Effective prompt management systems provide six interconnected components:

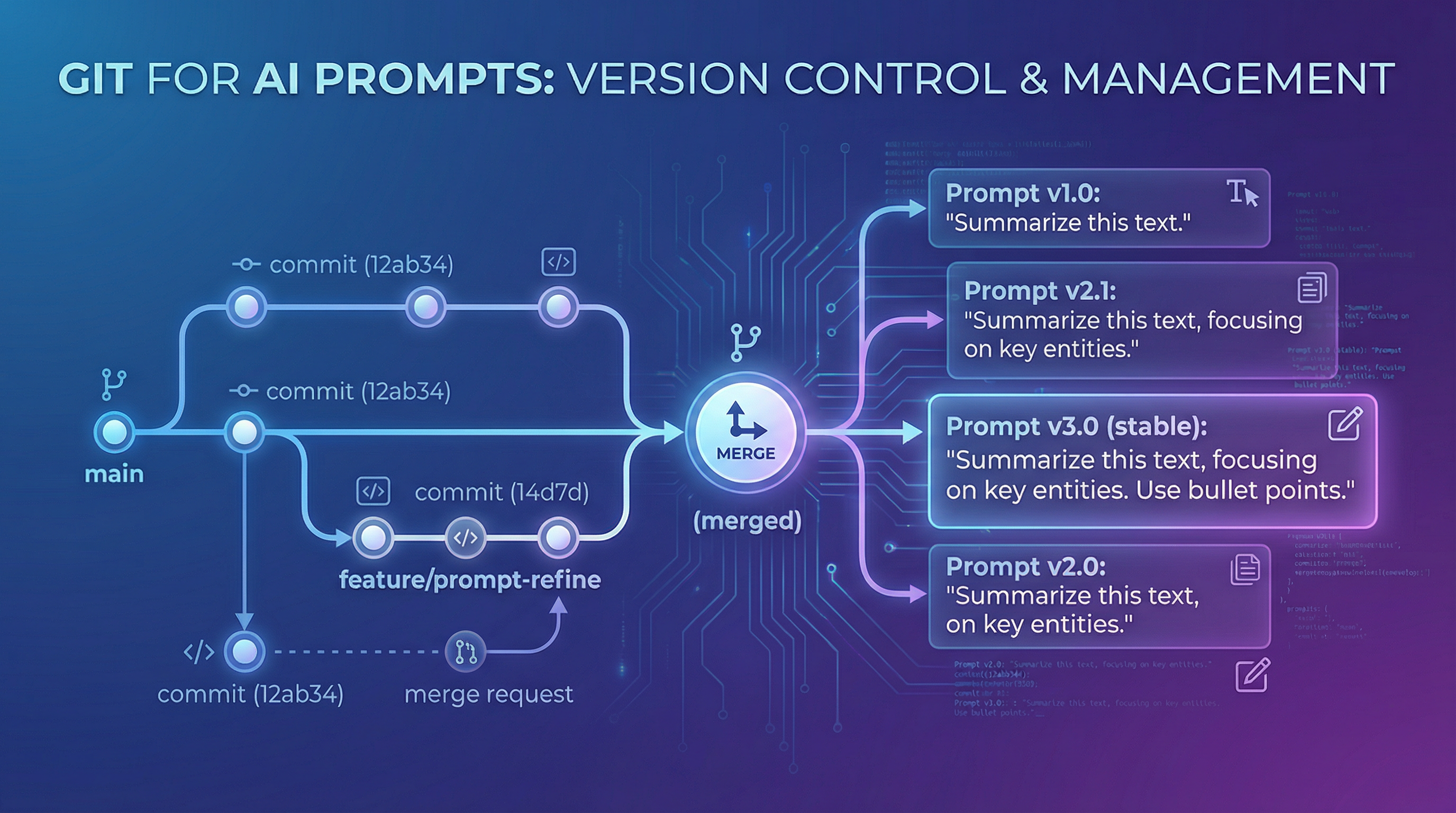

Version Control and History Tracking

Real version control means full diff tracking between iterations, not just files labeled v1, v2, v3. You need to see exactly what changed, when, and by whom. Tools like Promptsy's Version History track every change with complete diff views, letting you compare any two versions and roll back instantly if a deployment goes wrong.

Version control solves the "what broke?" problem. Production issues become tractable when you can pinpoint: this specific change on this date caused that regression.
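To make "full diff tracking" concrete, here is a minimal sketch assuming an in-memory store. `PromptHistory` and its methods are hypothetical names for illustration, not Promptsy's API. It uses Python's standard `difflib` to produce a unified diff between any two versions and treats rollback as republishing an old version's text as a new version, so the history stays append-only and the rollback itself is recorded.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PromptVersion:
    number: int
    text: str
    author: str
    created_at: datetime


@dataclass
class PromptHistory:
    """Hypothetical append-only version history for a single prompt."""
    name: str
    versions: list[PromptVersion] = field(default_factory=list)

    def commit(self, text: str, author: str) -> PromptVersion:
        # Every edit becomes a new numbered version; nothing is overwritten.
        version = PromptVersion(
            number=len(self.versions) + 1,
            text=text,
            author=author,
            created_at=datetime.now(timezone.utc),
        )
        self.versions.append(version)
        return version

    def get(self, number: int) -> PromptVersion:
        return self.versions[number - 1]

    def diff(self, old: int, new: int) -> str:
        # Line-level unified diff showing exactly what changed and who made each version.
        a, b = self.get(old), self.get(new)
        return "\n".join(
            difflib.unified_diff(
                a.text.splitlines(),
                b.text.splitlines(),
                fromfile=f"{self.name}@v{a.number} ({a.author})",
                tofile=f"{self.name}@v{b.number} ({b.author})",
                lineterm="",
            )
        )

    def rollback(self, number: int, author: str) -> PromptVersion:
        # Republish an old version's text as the newest version,
        # so the history still shows that a rollback happened.
        return self.commit(self.get(number).text, author)
```

For example, after committing "Be concise." and then "Be concise and friendly." as versions 1 and 2, `diff(1, 2)` shows the removed and added lines, and `rollback(1, ...)` creates version 3 with version 1's text.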

Organizational Structures

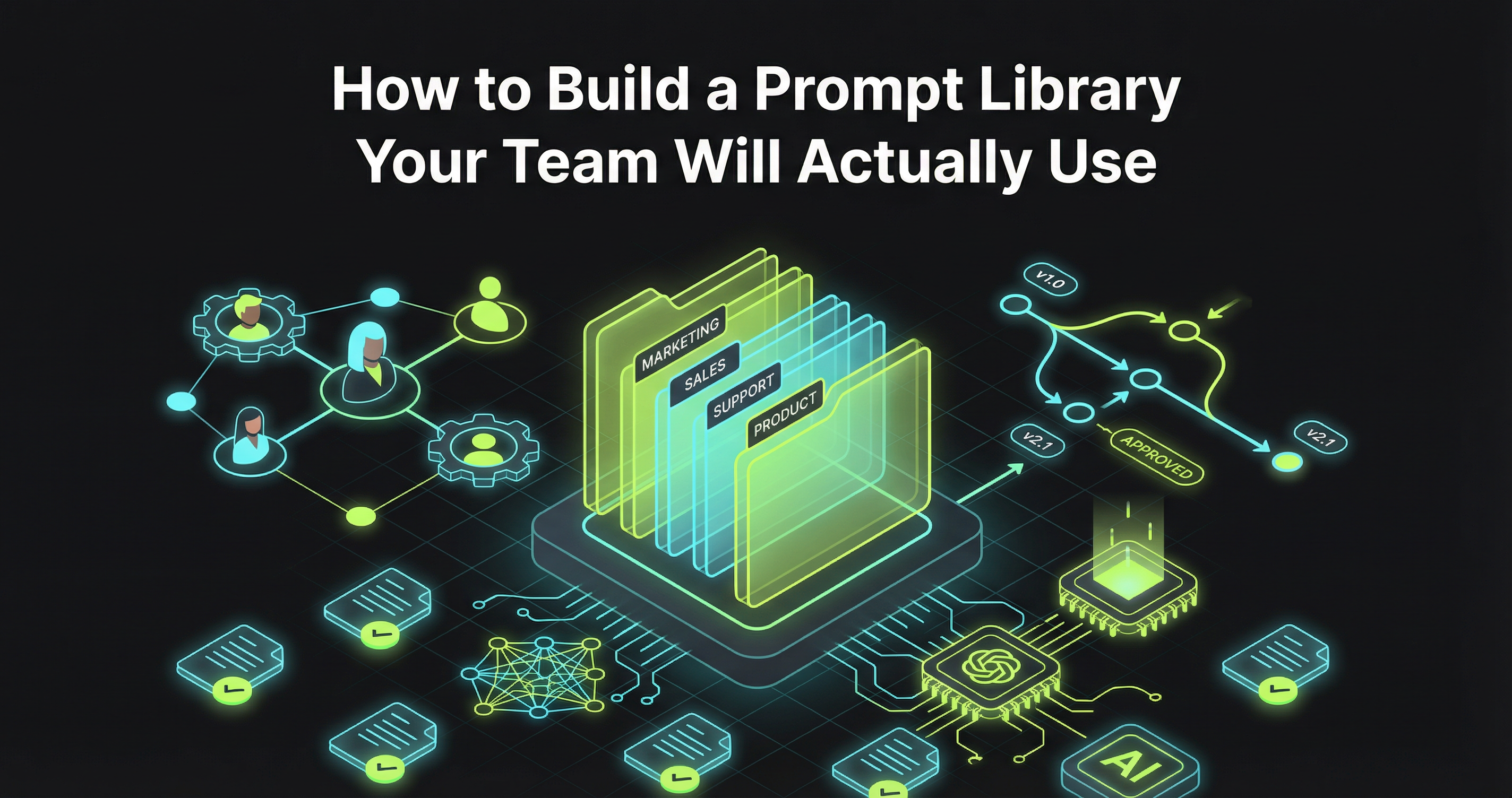

Folders, tags, collections, and taxonomies determine whether teams can find what they need or spend 20 minutes hunting through Slack. The structure matters more than the tool. Organize prompts by project, use case, or team with Collections and Tags in Promptsy.

Good organization increases reuse. Teams with structured libraries report 3x higher prompt reuse compared to ad-hoc approaches, and every reused prompt is one that another team doesn't have to rebuild from scratch.
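A small sketch of how tags and collections turn "hunting through Slack" into a lookup. The `PromptLibrary` class, its fields, and the example names are hypothetical, not any tool's API; the idea is an index from each tag to the prompt names carrying it, intersected across the requested tags.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptEntry:
    name: str
    collection: str  # e.g. a project or team
    tags: set[str] = field(default_factory=set)


class PromptLibrary:
    """Hypothetical in-memory library indexed by tag and collection."""

    def __init__(self) -> None:
        self._entries: dict[str, PromptEntry] = {}
        self._by_tag: dict[str, set[str]] = defaultdict(set)

    def add(self, entry: PromptEntry) -> None:
        # Assumes prompt names are unique across the library.
        self._entries[entry.name] = entry
        for tag in entry.tags:
            self._by_tag[tag].add(entry.name)

    def find(self, *tags: str, collection: Optional[str] = None) -> list[str]:
        # Prompt names carrying every requested tag, optionally limited
        # to one collection, sorted for stable output.
        names = set(self._entries)
        for tag in tags:
            names &= self._by_tag.get(tag, set())
        if collection is not None:
            names = {n for n in names if self._entries[n].collection == collection}
        return sorted(names)
```

With this in place, the marketing team searching for `find("summarization")` would discover engineering's summarization prompt before writing a near-duplicate of its own.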

Access Controls and Permissions

Role-based permissions define who can view, edit, or deploy prompts. Audit logs track all changes. Approval workflows add gates before production deployments. Enterprise teams can use Promptsy's Team tier for shared workspaces, pooled credits, and role-based access controls.

Access controls aren't paranoia—they're about blast radius. Junior engineers should be able to experiment safely without accidentally deploying changes to customer-facing systems.
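One way to express "blast radius" in code is a deny-by-default role matrix plus an audit log of every decision. This is a sketch under assumptions: the role names, the `Action` values, and the rule that engineers may deploy to staging but not production are illustrative choices, not Promptsy's permission model.

```python
from enum import Enum


class Action(Enum):
    VIEW = "view"
    EDIT = "edit"
    DEPLOY_STAGING = "deploy_staging"
    DEPLOY_PRODUCTION = "deploy_production"


# Hypothetical role matrix: engineers can experiment and reach staging,
# but only leads can change customer-facing production prompts.
ROLE_PERMISSIONS: dict[str, frozenset[Action]] = {
    "viewer": frozenset({Action.VIEW}),
    "engineer": frozenset({Action.VIEW, Action.EDIT, Action.DEPLOY_STAGING}),
    "lead": frozenset(Action),  # every action
}


def is_allowed(role: str, action: Action) -> bool:
    # Unknown roles get no permissions (deny by default).
    return action in ROLE_PERMISSIONS.get(role, frozenset())


def authorize(user: str, role: str, action: Action, audit_log: list[str]) -> bool:
    allowed = is_allowed(role, action)
    # Record every decision, allowed or denied, for the audit trail.
    verdict = "ALLOW" if allowed else "DENY"
    audit_log.append(f"{user} ({role}) {action.value}: {verdict}")
    return allowed
```

Logging denials as well as approvals is deliberate: when someone asks "who tried to change this prompt?", the failed attempts are part of the answer.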

Testing and Evaluation Frameworks

Automated tests catch regressions before deployment. Run your prompt against a test suite of expected inputs and outputs. Teams lacking evaluation frameworks experience 3x higher regression rates—changes that seem fine but break edge cases customers discover.

Testing prompts feels different from testing code, but the principle is identical: define expected behavior, verify changes don't break it.
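Because LLM output varies from run to run, a prompt test suite usually checks properties of the output (required phrases, a length budget) rather than exact strings. The sketch below assumes the model is any function from a rendered prompt to text and that templates use a `{input}` placeholder; both are assumptions for illustration, and `run_suite` is a hypothetical helper. Note that `str.format` would also interpret any other literal braces in the template.

```python
from dataclasses import dataclass
from typing import Callable

# Any function from a fully rendered prompt to output text.
# In production this would wrap your LLM provider's client.
Model = Callable[[str], str]


@dataclass
class Case:
    user_input: str
    must_contain: list[str]
    max_words: int = 120


def run_suite(template: str, cases: list[Case], model: Model) -> list[str]:
    """Return failure messages; an empty list means the suite passed."""
    failures = []
    for case in cases:
        output = model(template.format(input=case.user_input))
        lowered = output.lower()
        # Property check 1: required phrases appear (case-insensitive).
        for phrase in case.must_contain:
            if phrase.lower() not in lowered:
                failures.append(f"{case.user_input!r}: missing {phrase!r}")
        # Property check 2: the answer stays within its word budget.
        words = len(output.split())
        if words > case.max_words:
            failures.append(f"{case.user_input!r}: {words} words > {case.max_words}")
    return failures
```

Run the suite against both the current and the candidate prompt version; any new failure is a regression to investigate before deployment. A stub model that returns fixed text is enough to exercise the harness itself offline.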

Deployment and Rollback Mechanisms

Promote changes safely through environments: development → staging → production. Rollback quickly when something breaks. Deployment mechanisms separate experimentation from production risk.

The ability to deploy confidently and revert instantly determines iteration speed. Teams with systematic deployment see 40-60% faster iteration cycles because engineers aren't terrified of shipping changes.
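A common way to get "deploy confidently, revert instantly" is to make each environment a pointer to a prompt version rather than a copy of its text. This sketch assumes the three environments named above and integer version numbers; `Deployments` is a hypothetical class. Promotion only moves one step at a time, and rollback just repoints the environment at what it served before.

```python
ENVIRONMENTS = ["development", "staging", "production"]


class Deployments:
    """Hypothetical map from environment to the prompt version it serves."""

    def __init__(self) -> None:
        self.live: dict[str, int] = {}
        # Per-environment stack of previously live versions, used for rollback.
        self.previous: dict[str, list[int]] = {env: [] for env in ENVIRONMENTS}

    def _set(self, env: str, version: int) -> None:
        if env in self.live:
            self.previous[env].append(self.live[env])
        self.live[env] = version

    def deploy_dev(self, version: int) -> None:
        self._set("development", version)

    def promote(self, source: str, target: str) -> None:
        # Only one step at a time: development -> staging -> production.
        if ENVIRONMENTS.index(target) != ENVIRONMENTS.index(source) + 1:
            raise ValueError(f"cannot promote {source} -> {target}")
        if source not in self.live:
            raise ValueError(f"nothing deployed in {source}")
        self._set(target, self.live[source])

    def rollback(self, env: str) -> int:
        # Repoint the environment at its previous version; nothing is rebuilt.
        if not self.previous[env]:
            raise ValueError(f"no earlier version in {env}")
        self.live[env] = self.previous[env].pop()
        return self.live[env]
```

Because rollback is a pointer change, it takes effect as soon as the application next reads which version is live, which is what makes shipping changes feel low-risk.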

Analytics and Monitoring

Usage patterns, costs, quality metrics. Which prompts get used most? Which burn excessive tokens? Where do customers hit errors? Analytics turn prompt management from guesswork into data.

Poor prompt management increases operational costs 30-50% through token waste alone. Analytics identify the expensive prompts worth optimizing first.
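Finding "the expensive prompts worth optimizing first" can start as a simple aggregation over call logs. This sketch assumes your application records, for each LLM call, which prompt it used, its input and output token counts, and whether it errored; the `Call` record and `summarize_usage` helper are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Call:
    prompt_name: str
    input_tokens: int
    output_tokens: int
    error: bool = False


def summarize_usage(calls: list[Call]) -> list[dict]:
    """Aggregate per-prompt usage, most token-hungry prompts first."""
    stats: dict[str, dict] = defaultdict(lambda: {"calls": 0, "tokens": 0, "errors": 0})
    for call in calls:
        s = stats[call.prompt_name]
        s["calls"] += 1
        s["tokens"] += call.input_tokens + call.output_tokens
        s["errors"] += int(call.error)
    rows = [
        {
            "prompt": name,
            **s,
            "avg_tokens": s["tokens"] / s["calls"],
            "error_rate": s["errors"] / s["calls"],
        }
        for name, s in stats.items()
    ]
    # Sort by total tokens so the biggest cost drivers surface first.
    return sorted(rows, key=lambda r: r["tokens"], reverse=True)
```

Ranking by total tokens rather than tokens per call matters: a modest prompt called millions of times can cost more than a long one that rarely runs.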

Why Prompt Management Matters Now

Timing explains urgency. Production AI means production consequences—customer experience, operational costs, competitive advantage. Get prompts wrong and customers notice immediately.

Scale amplifies chaos exponentially. Managing 10 prompts doesn't require infrastructure. Managing 10,000 prompts absolutely does. The tools that worked at small scale break completely at large scale.

Enterprise compliance demands transparency. Governance frameworks require auditability. Only 27% of organizations review all gen AI outputs before use, but regulators increasingly expect clear answers to: "How do you govern AI behavior? Who approved this change? What's your audit trail?"

Prompt management directly impacts team velocity. Teams report that systematic management reduces debugging time by 50% on average and makes iteration cycles 40-60% faster. Engineers spend more time building, less time hunting for "that good version from Slack three months ago."

Cost control matters more as usage scales. Token costs add up fast—poor prompt management can increase operational costs 30-50% through inefficiency. The difference between a 500-token prompt and a 300-token prompt seems small until you're running millions of requests.
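The arithmetic behind the 500-token versus 300-token comparison is linear: tokens saved per request times request volume times price. The function below is a sketch; the price parameter is a hypothetical placeholder, not any provider's actual rate, and real pricing often charges input and output tokens differently.

```python
def monthly_savings(
    tokens_saved_per_request: int,
    requests_per_month: int,
    usd_per_million_tokens: float,
) -> float:
    """Estimated monthly savings from trimming a prompt (price is an assumption)."""
    # Savings scale linearly with request volume.
    tokens_saved = tokens_saved_per_request * requests_per_month
    return tokens_saved / 1_000_000 * usd_per_million_tokens


# Trimming 500 -> 300 tokens saves 200 tokens per request.
# At 5 million requests a month and a hypothetical $1 per million tokens,
# that is 1 billion tokens, or about $1,000 per month.
print(monthly_savings(200, 5_000_000, 1.0))
```

Plug in your own volume and contracted rate; the point is that a per-request difference that looks trivial becomes a budget line at production scale.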

Knowledge preservation compounds over time. Capture institutional learning instead of losing it every time someone leaves. Agencies using structured AI prompts report 67% average productivity improvements, largely because teams build on past work instead of restarting.

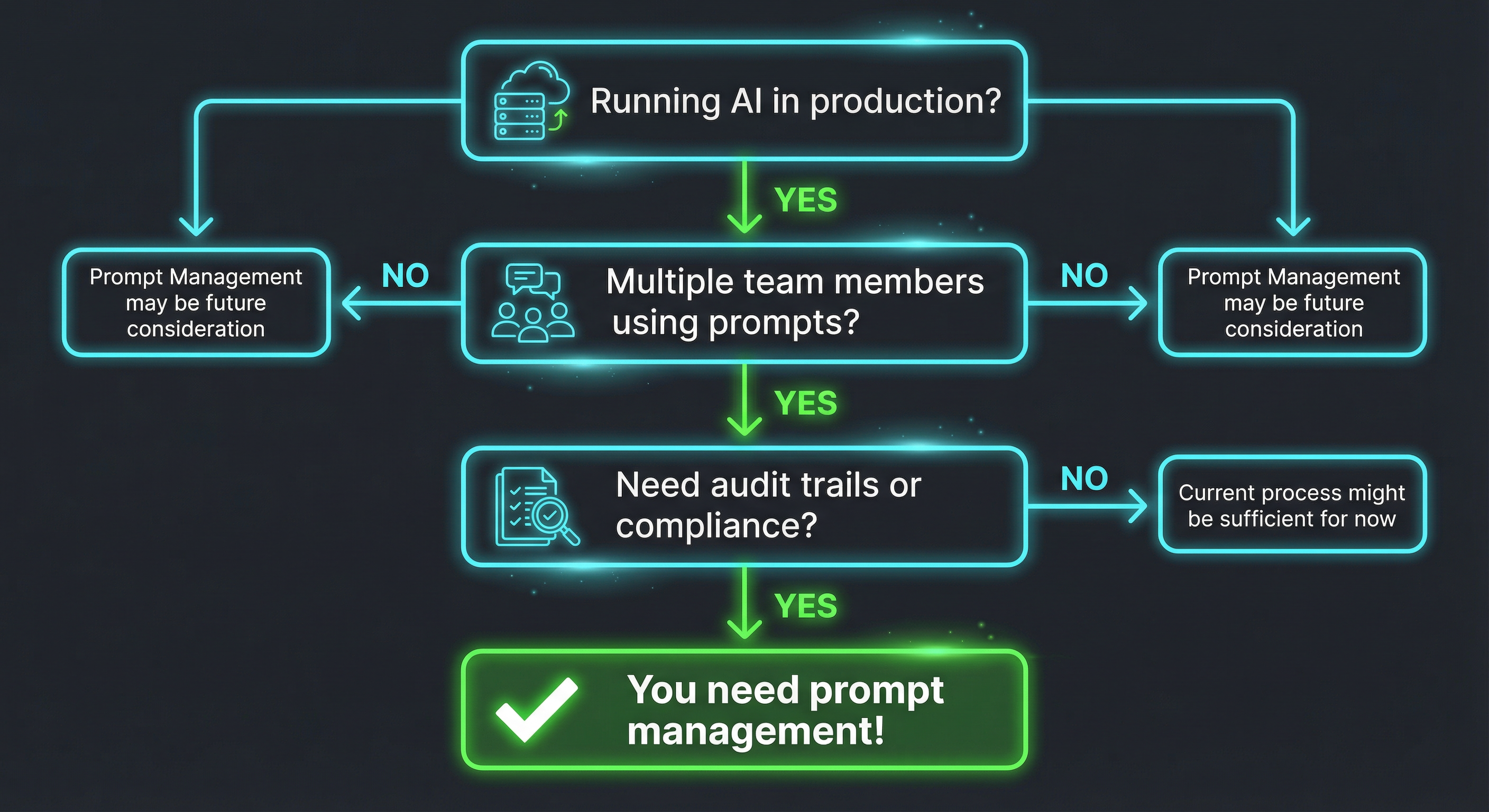

Who Needs Prompt Management?

You know you need this when:

Teams running AI in production. If customers interact with your AI—directly or indirectly—you need prompt management. Customer-facing features demand reliability. Internal tools at scale demand efficiency.

Engineering managers coordinating multiple applications. Once you have more than one AI feature, you have shared problems. Prompt management prevents every team from solving everything independently.

AI engineers tired of prompt archaeology. If you've ever asked "which prompt are we running in production?" or spent an hour reconstructing the good version from memory, you need better infrastructure.

Organizations requiring compliance and audit trails. Forty percent of enterprises cite governance and compliance as the primary driver for adopting prompt management. Regulated industries can't run AI without clear provenance.

Small teams need this too—just simpler versions. A three-person startup doesn't need enterprise approval workflows, but they absolutely need version control and organization. The investment pays back quickly even at small scale.

Timing matters. Adopt prompt management when shipping to production, not after chaos sets in. The best time was before your first deployment. The second-best time is now.

Getting Started with Prompt Management

Start simple. Ship first.

Audit your prompts. Inventory everything across your team. Where do prompts live now? Scattered files, notebooks, Slack threads, hardcoded in apps? Round them up.
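For prompts hardcoded in application code, a quick scan can seed the inventory. This is a heuristic sketch that assumes a Python codebase and a naming habit where prompt variables end in `PROMPT` (for example `SYSTEM_PROMPT`); `find_prompt_constants` is a hypothetical helper built on the standard `ast` module. It only catches plain string-literal assignments, so f-strings, concatenations, and prompts loaded from elsewhere still need a manual pass.

```python
import ast
from pathlib import Path


def find_prompt_constants(root: str, suffix: str = "PROMPT") -> list[tuple[str, int, str]]:
    """Return (file, line, variable) for string literals assigned to *PROMPT-style names."""
    found = []
    for path in sorted(Path(root).rglob("*.py")):
        try:
            tree = ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        except SyntaxError:
            continue  # skip files that don't parse; inventory those by hand
        for node in ast.walk(tree):
            # Only plain `NAME = "literal"` assignments are matched;
            # f-strings and concatenations are ignored by this heuristic.
            if not isinstance(node, ast.Assign):
                continue
            if not (isinstance(node.value, ast.Constant) and isinstance(node.value.value, str)):
                continue
            for target in node.targets:
                if isinstance(target, ast.Name) and target.id.upper().endswith(suffix):
                    found.append((str(path), node.lineno, target.id))
    return found
```

Running it over a source tree and printing each `file:line name` gives a starting list of prompts to move under management.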

Define your taxonomy. Establish naming conventions and organizational structure before migrating prompts. How will you categorize? By product, feature, team, use case? Pick a system that matches how your team thinks.
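Whatever taxonomy you choose, encoding it as a checkable rule keeps it from eroding. As an example only, assume the convention `<team>/<feature>/<use-case>` in lowercase kebab-case; the pattern and the `parse_prompt_name` helper below are hypothetical and should be adapted to your own scheme.

```python
import re

# Hypothetical convention: <team>/<feature>/<use-case>, lowercase kebab-case segments.
SEGMENT = r"[a-z0-9]+(?:-[a-z0-9]+)*"
NAME_PATTERN = re.compile(
    rf"(?P<team>{SEGMENT})/(?P<feature>{SEGMENT})/(?P<use_case>{SEGMENT})"
)


def parse_prompt_name(name: str) -> dict[str, str]:
    """Split a prompt name into its taxonomy fields, or raise if it breaks the convention."""
    match = NAME_PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(
            f"{name!r} does not follow <team>/<feature>/<use-case> in lowercase kebab-case"
        )
    return match.groupdict()
```

Running a check like this in CI or at save time rejects names such as `Support/tickets` before they enter the library, and the parsed fields double as filters for search.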

Establish workflows. How do changes happen? Who reviews? What gates exist before production? Document the process even if it's simple: "engineer proposes, tech lead reviews, deploys to staging, then production."
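The example workflow above ("engineer proposes, tech lead reviews, deploys to staging, then production") can be written down as an explicit state machine, so skipped steps fail loudly. This is a sketch; the stage names, the `Change` class, and the rule that authors cannot approve their own changes are illustrative assumptions.

```python
from enum import Enum


class Stage(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    STAGING = "staging"
    PRODUCTION = "production"
    REJECTED = "rejected"


# Allowed transitions: propose -> review -> staging -> production.
TRANSITIONS = {
    Stage.PROPOSED: {Stage.APPROVED, Stage.REJECTED},
    Stage.APPROVED: {Stage.STAGING},
    Stage.STAGING: {Stage.PRODUCTION, Stage.REJECTED},
    Stage.PRODUCTION: set(),
    Stage.REJECTED: set(),
}


class Change:
    """Hypothetical record of one proposed prompt change moving through the gates."""

    def __init__(self, author: str) -> None:
        self.author = author
        self.stage = Stage.PROPOSED

    def advance(self, to: Stage, actor: str) -> None:
        if to not in TRANSITIONS[self.stage]:
            raise ValueError(f"cannot go from {self.stage.value} to {to.value}")
        # Review gate: someone other than the author must approve.
        if to is Stage.APPROVED and actor == self.author:
            raise PermissionError("a reviewer other than the author must approve")
        self.stage = to
```

Keeping the transitions in one table means the documented process and the enforced process are the same thing; adding a gate later is a one-line change.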

Choose your tooling. Build vs. buy depends on team size and requirements. Building custom infrastructure makes sense for large engineering orgs with specific needs. Most teams should buy—the opportunity cost of building prompt management infrastructure outweighs the subscription cost. Tools like Promptsy's Prompt Library let you organize prompts with folders, tags, and collections, making it easy to find and reuse what works.

Implement incrementally. Start with production-critical prompts first. Don't try migrating everything at once. Get the high-stakes prompts under management, prove the value, then expand.

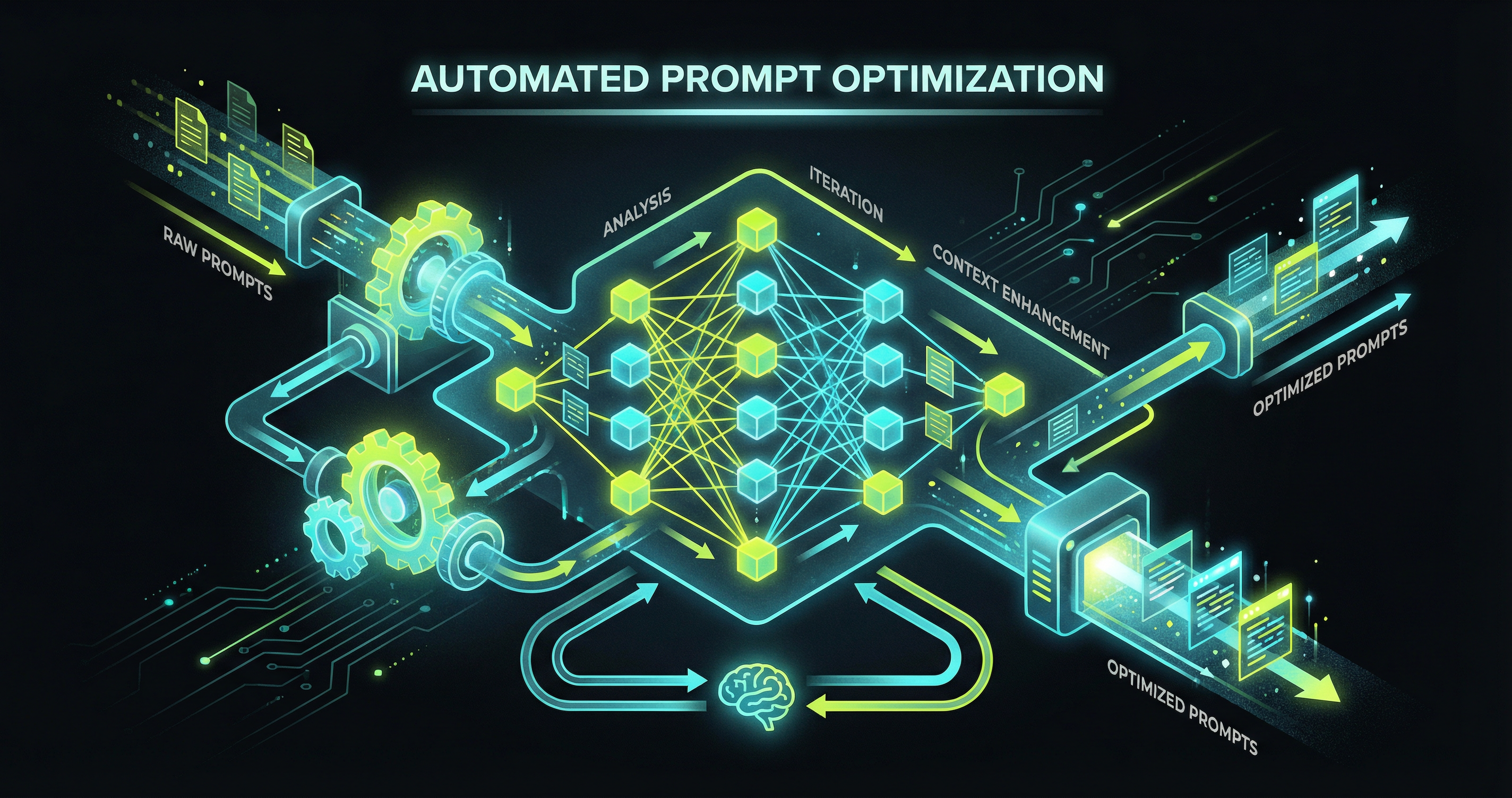

Refine over time. Once you have prompts organized, tools like Promptsy's AI Optimization can help you refine them—reformatting for clarity, expanding with examples, or tightening for token efficiency. Promptsy's public sharing lets you share proven prompts with your team via simple links, no login required.

Measure success. Track metrics that matter: time-to-deploy for prompt changes, debugging time when issues occur, reuse rates across team, rollback frequency. Good infrastructure should make these numbers improve steadily.
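Two of these metrics fall straight out of deployment records. The sketch assumes you log when each prompt change was proposed and when it reached production, plus whether it was later rolled back; the `Deploy` record and `deployment_metrics` helper are hypothetical. Debugging time and reuse rates need other data sources and are left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deploy:
    prompt: str
    proposed_at: datetime
    deployed_at: datetime
    rolled_back: bool = False


def deployment_metrics(deploys: list[Deploy]) -> dict[str, float]:
    """Median time-to-deploy in hours and rollback frequency for a period."""
    if not deploys:
        return {"median_hours_to_deploy": 0.0, "rollback_rate": 0.0}
    # Dividing one timedelta by another yields a float number of hours.
    hours = [(d.deployed_at - d.proposed_at) / timedelta(hours=1) for d in deploys]
    return {
        "median_hours_to_deploy": median(hours),
        "rollback_rate": sum(d.rolled_back for d in deploys) / len(deploys),
    }
```

The median is used instead of the mean so one change that sat in review over a weekend doesn't mask an otherwise fast pipeline. Tracked month over month, both numbers should trend down.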

The goal isn't perfect architecture. The goal is shipping AI products faster and more reliably.

Frequently Asked Questions

What's the difference between prompt engineering and prompt management?

Prompt engineering is the craft of writing effective prompts—the techniques, patterns, and best practices for getting LLMs to produce quality outputs. Prompt management is the infrastructure for running those prompts in production—version control, organization, deployment, and governance. You need both. Great prompts without management create chaos. Great infrastructure with terrible prompts doesn't help either.

When should we adopt prompt management?

Adopt when shipping AI to production, not after chaos forces your hand. Clear signals you need it: multiple team members working with prompts, customer-facing AI features, difficulty tracking what's deployed, time wasted searching for prompts, or compliance requirements. Don't wait for disaster. Set up basic infrastructure before your first production deployment.

How is prompt management different from code management?

The principles are similar—version control, testing, deployment. The details differ. Prompts change more frequently than code. Testing is less deterministic (LLMs aren't perfectly reproducible). Evaluation requires different tooling (measuring output quality vs. pass/fail tests). Non-engineers often need to edit prompts (product managers, content writers), requiring different access patterns than code repos. But the core insight holds: prompts are code and deserve similar discipline.

Do small teams need prompt management?

Yes, but simpler versions. A three-person team doesn't need enterprise approval workflows or role-based access controls. They absolutely need version control ("what changed?"), organization ("where is it?"), and basic deployment discipline ("what's in production?"). Start minimal. Add complexity only when pain demands it.

What's the ROI timeline?

Most teams see measurable returns within weeks. Immediate benefits: stop losing work, reduce debugging time, eliminate duplicate effort. Longer-term benefits compound: faster iteration, lower costs, preserved knowledge. Teams report 50% debugging time reduction and 40-60% faster iteration cycles—those gains start accruing immediately after adoption.

Can we use existing version control tools like Git?

You can, but Git wasn't designed for prompt workflows. Challenges: non-technical team members struggle with Git, diff viewing for natural language isn't optimized, testing and evaluation require separate tooling, deployment to AI applications needs integration work. Some teams make Git work. Most find dedicated prompt management tools fit the workflow better. Pick based on your team's technical comfort and specific needs.

What Comes Next

Prompt management shifts AI development from chaos to engineering discipline. The 73% of teams struggling with prompt versioning aren't making mistakes—they're using tools designed for different problems. Once prompts become production infrastructure, they need infrastructure tooling.

The core components—version control, organization, access controls, testing, deployment, analytics—mirror software engineering because prompts are software. The teams shipping AI fastest aren't necessarily the ones with the best models or the cleverest prompts. They're the ones with the best infrastructure.

Start small. Audit your prompts, pick an organizational structure, establish basic workflows, choose appropriate tooling, and implement incrementally. The goal isn't perfection. The goal is shipping AI products reliably while preserving what your team learns along the way.

Production AI demands production discipline. Prompt management provides it.
